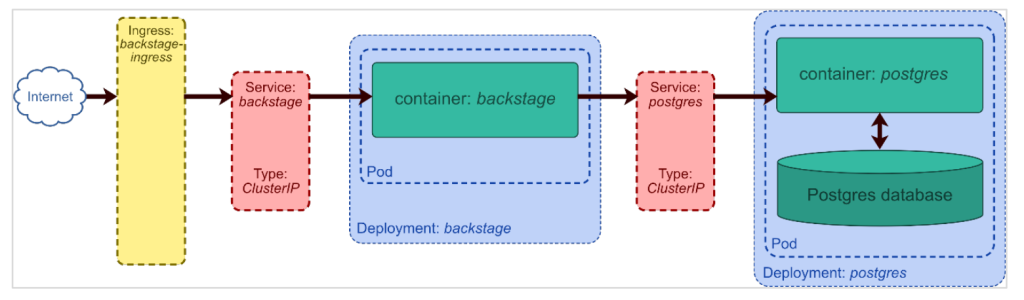

In the last blog post, we talked a lot about the installation and the architecture of Backstage. We also discussed some design considerations for the Kubernetes installation. Now it is time to work out the details of the Kubernetes installation.

We will use the design from the last blog post as a basis.

In the following, we will use a namespace called “backstage” for the installation. If you don’t have it yet, create it with:

```shell
kubectl create ns backstage
```

## Postgres Database

Let’s begin with the Kubernetes Deployment for the Postgres database. We need the following components:

- A Persistent Volume Claim for storing the data

- A Kubernetes Secret to store the credentials

- The Postgres Deployment itself

- A Service manifest to expose the Deployment
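As an alternative to the base64-encoded `data` field used for the Secret in this post, Kubernetes also accepts plain-text values under `stringData` and encodes them itself when the Secret is stored. A sketch of the Postgres Secret in that form, with the same example values, could look like this:

```yaml
# Alternative form of kubernetes/postgres-secrets.yaml (sketch)
apiVersion: v1
kind: Secret
metadata:
  name: postgres-secrets
  namespace: backstage
type: Opaque
# Plain-text values; the API server stores them base64-encoded under data
stringData:
  POSTGRES_USER: backstage
  POSTGRES_PASSWORD: test
  # Secret values must be strings, so the port is quoted
  POSTGRES_SERVICE_PORT: "5432"
```

This avoids encoding mistakes such as an accidental trailing newline. Since the password then sits in the file in clear text, the file should be kept out of version control, just like the base64 variant (base64 is an encoding, not encryption).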

Let’s begin with the Persistent Volume Claim:

```yaml
# kubernetes/postgres-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-pvc
  namespace: backstage
spec:
  storageClassName: standard
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2G
```

Next comes the Secrets manifest. Remember that the values under `data` must be base64 encoded, without a trailing newline (for example `echo -n backstage | base64`). So if our user is “backstage”, the password is “test” and the port is 5432, the corresponding YAML looks like the following:

```yaml
# kubernetes/postgres-secrets.yaml
apiVersion: v1
kind: Secret
metadata:
  name: postgres-secrets
  namespace: backstage
type: Opaque
data:
  POSTGRES_USER: YmFja3N0YWdl      # "backstage"
  POSTGRES_PASSWORD: dGVzdA==      # "test"
  POSTGRES_SERVICE_PORT: NTQzMg==  # "5432" (no trailing newline)
```

Now let’s continue with the Deployment:

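```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres
  namespace: backstage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      containers:
        - name: postgres
          image: postgres:13.2-alpine
          imagePullPolicy: 'IfNotPresent'
          ports:
            - containerPort: 5432
          envFrom:
            - secretRef:
                name: postgres-secrets
          env:
            # Keep the data in a subdirectory of the mount: initdb refuses to
            # run in a non-empty directory (e.g. one containing lost+found)
            - name: PGDATA
              value: /var/lib/postgresql/data/pgdata
          volumeMounts:
            # Without this mount the PVC is never used and data is lost on restart
            - name: postgresdb
              mountPath: /var/lib/postgresql/data
      volumes:
        - name: postgresdb
          persistentVolumeClaim:
            claimName: postgres-pvc
```

Now, we expose the Postgres Deployment as a Service: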

```yaml
# kubernetes/postgres-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: postgres
  namespace: backstage
spec:
  selector:
    app: postgres
  ports:
    - port: 5432
```

## Backstage Components

With the database running in our Kubernetes cluster, we can continue with the Backstage installation itself. We need the following components:

- A Secret manifest for storing credentials, such as a GitHub token for Backstage or the Docker config JSON used to pull the Backstage image

- The Backstage Deployment

- The Backstage Service

- An Ingress
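The Secrets below reach the Backstage container only as environment variables via `envFrom`; Backstage still has to reference them in its configuration. A minimal sketch of the relevant parts of an `app-config.production.yaml` could look like this, assuming your image loads that file and relying on Backstage’s `${VAR}` substitution. `POSTGRES_SERVICE_HOST` is not set in any Secret: Kubernetes injects it for the `postgres` Service, but only into pods started after the Service exists (using the Service’s DNS name `postgres` as host avoids that ordering issue). Avoid `POSTGRES_PORT` here, since for a Service named `postgres` Kubernetes sets it to a Docker-link-style value such as `tcp://<cluster-ip>:5432`.

```yaml
# app-config.production.yaml (sketch; adapt names to your app)
backend:
  database:
    client: pg
    connection:
      # Injected by Kubernetes for the "postgres" Service
      host: ${POSTGRES_SERVICE_HOST}
      # Defined in postgres-secrets (Kubernetes injects it for the Service as well)
      port: ${POSTGRES_SERVICE_PORT}
      # Defined in postgres-secrets
      user: ${POSTGRES_USER}
      password: ${POSTGRES_PASSWORD}

integrations:
  github:
    - host: github.com
      # Defined in backstage-secrets
      token: ${GITHUB_TOKEN}
```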

Let’s begin with the Secrets:

```yaml
# kubernetes/backstage-secrets.yaml
apiVersion: v1
kind: Secret
metadata:
  name: backstage-secrets
  namespace: backstage
type: Opaque
data:
  # Base64-encoded GitHub token (placeholder)
  GITHUB_TOKEN: <base64-encoded GitHub token>
---
apiVersion: v1
kind: Secret
metadata:
  name: pull-secrets
  namespace: backstage
type: kubernetes.io/dockerconfigjson
data:
  # Base64-encoded Docker config JSON with the registry credentials (placeholder)
  .dockerconfigjson: <base64-encoded Docker config JSON>
```

The following manifest defines the Backstage Deployment (remember that your Backstage container image needs to be stored in a registry the cluster can pull from):

```yaml
# kubernetes/backstage.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backstage
  namespace: backstage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: backstage
  template:
    metadata:
      labels:
        app: backstage
    spec:
      # Registry credentials from the pull-secrets Secret (pod-level field)
      imagePullSecrets:
        - name: pull-secrets
      containers:
        - name: backstage
          image: ghcr.io/my-backstage-project/backstage:0.1
          imagePullPolicy: Always
          ports:
            - name: http
              containerPort: 7007
          envFrom:
            - secretRef:
                name: postgres-secrets
            - secretRef:
                name: backstage-secrets
          # Uncomment if health checks are enabled in your app:
          # https://backstage.io/docs/plugins/observability#health-checks
          # readinessProbe:
          #   httpGet:
          #     port: 7007
          #     path: /healthcheck
          # livenessProbe:
          #   httpGet:
          #     port: 7007
          #     path: /healthcheck
```

Now let’s expose Backstage as a Service:

```yaml
# kubernetes/backstage-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: backstage
  namespace: backstage
spec:
  selector:
    app: backstage
  ports:
    - name: http
      port: 80
      targetPort: http
```

Finally, we need an Ingress:

```yaml
# kubernetes/ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: backstage
  name: backstage-ingress
spec:
  defaultBackend:
    service:
      name: backstage
      port:
        number: 80
```

Setting up an Ingress differs slightly between Kubernetes distributions. Here, we use Google Kubernetes Engine (GKE), where this manifest automatically creates a Google Cloud load balancer. In addition, we don’t use HTTPS for our deployment. Setting up encryption would require a certificate and some additional configuration. However, as we want to concentrate on Backstage, we skip this configuration here.
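If you do want HTTPS, one option is to add a `tls` section to the same Ingress. The following is a minimal sketch: it assumes the certificate and key are already stored in a Kubernetes TLS Secret (for example created with `kubectl create secret tls backstage-tls --cert=tls.crt --key=tls.key -n backstage`) and that a DNS record points at the load balancer. The names `backstage-tls`, `tls.crt`, `tls.key` and `backstage.example.com` are placeholders.

```yaml
# Sketch: the Ingress from above with TLS (placeholder host and Secret name)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: backstage
  name: backstage-ingress
spec:
  tls:
    - hosts:
        - backstage.example.com   # placeholder DNS name
      secretName: backstage-tls   # placeholder TLS Secret with tls.crt/tls.key
  defaultBackend:
    service:
      name: backstage
      port:
        number: 80
```

Backstage itself would then also need the HTTPS URL in its `app.baseUrl` and `backend.baseUrl` settings. On GKE, Google-managed certificates are an alternative to supplying the certificate in a Secret; this sketch does not cover them.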

That’s it for this blog post. In the next post, we will focus on some Backstage internals.

> Click here for Part 10: Working with Software Templates and Backstage Search