Introduction

This is the final post in our Tekton tutorial series. In the previous posts, we discussed how to install and configure Tekton. In this post, we briefly discuss how to configure Tekton Chains and the Tekton Dashboard.

Tekton Chains

As discussed earlier in this series, attestation is an important requirement of the SLSA framework. Within the Tekton ecosystem, this is handled by Tekton Chains.

In addition to generating attestations, Tekton Chains can sign TaskRun results, for example with X.509 certificates or keys held in a KMS, and store the signatures in one of several storage backends. Typically, the attestation is stored together with the artifact in the OCI registry, but a dedicated storage location independent of the artifact can also be configured. Alternative storage options include Sigstore’s Rekor transparency log, document stores such as Firestore, DynamoDB and MongoDB, and Grafeas.
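As a rough illustration of where these choices are made, the Python sketch below builds a merge patch for the chains-config ConfigMap and prints a matching kubectl patch command. The key names (artifacts.taskrun.format, artifacts.taskrun.storage, artifacts.taskrun.signer, artifacts.oci.storage, transparency.enabled) and the tekton-chains namespace reflect our reading of the Tekton Chains configuration documentation; allowed values differ between Chains releases, so treat them as assumptions and check them against the version you install.

```python
import json
import shlex


def chains_config_patch(taskrun_storage: str = "oci",
                        signer: str = "x509",
                        transparency: bool = True) -> dict:
    """Build a merge patch for the chains-config ConfigMap.

    Key names follow the Tekton Chains configuration docs as we understand
    them; verify them (and their allowed values) for your Chains version.
    """
    return {
        "data": {
            # Format of the provenance generated for TaskRuns
            "artifacts.taskrun.format": "in-toto",
            # Where TaskRun signatures/attestations are stored (e.g. oci, tekton, docdb, grafeas)
            "artifacts.taskrun.storage": taskrun_storage,
            # Which signer to use: x509 (key pair or Fulcio) or kms
            "artifacts.taskrun.signer": signer,
            # Store image signatures next to the image in the OCI registry
            "artifacts.oci.storage": "oci",
            # Additionally record entries in the Rekor transparency log
            "transparency.enabled": "true" if transparency else "false",
        }
    }


def kubectl_patch_command(patch: dict, namespace: str = "tekton-chains") -> list:
    """Return the kubectl command (as an argument list) that applies the patch."""
    return [
        "kubectl", "patch", "configmap", "chains-config",
        "-n", namespace, "--type", "merge", "-p", json.dumps(patch),
    ]


if __name__ == "__main__":
    print(shlex.join(kubectl_patch_command(chains_config_patch())))
```

Switching taskrun_storage to another backend, or signer to kms, only changes the data map; the Chains controller picks up the ConfigMap, so the pipelines themselves stay untouched.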

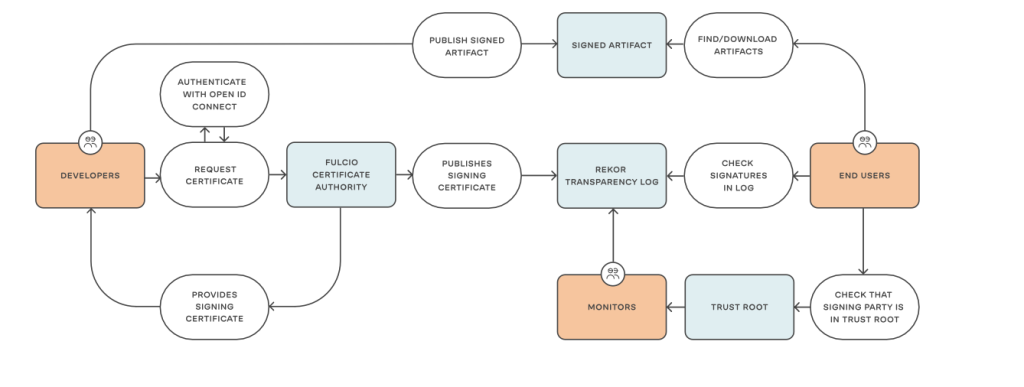

An important aspect of Tekton Chains is its integration with the Sigstore project, in particular with Fulcio and Rekor, which were explained in more detail earlier in this series. The SLSA provenance requirements that Rekor and Fulcio help to meet are that signing keys must be stored in a secure location and that the tenant must have no way of subsequently changing the attestation. Key management via a KMS is just as valid as using Fulcio, and both would meet the SLSA requirements; Rekor, however, specifically satisfies the requirement of immutability. As already mentioned, Rekor is built on Merkle trees, which make any deletion or modification of recorded entries detectable. Through the services Sigstore provides, Fulcio and Rekor establish an important link of trust between producer and consumer.
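The minimal Python sketch below shows why a Merkle tree makes tampering detectable: every entry feeds into a single root hash, so altering or dropping any entry changes the root that was published before. It uses RFC 6962-style hashing (the scheme of Certificate Transparency logs) and is a simplified illustration, not Rekor’s actual implementation, which also provides inclusion and consistency proofs.

```python
import hashlib
from typing import List


def leaf_hash(entry: bytes) -> bytes:
    # 0x00 prefix marks a leaf (RFC 6962-style domain separation)
    return hashlib.sha256(b"\x00" + entry).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    # 0x01 prefix marks an interior node
    return hashlib.sha256(b"\x01" + left + right).digest()


def merkle_root(entries: List[bytes]) -> bytes:
    """Compute the Merkle tree root over the log entries, level by level."""
    if not entries:
        return hashlib.sha256(b"").digest()
    level = [leaf_hash(e) for e in entries]
    while len(level) > 1:
        next_level = []
        for i in range(0, len(level), 2):
            if i + 1 < len(level):
                next_level.append(node_hash(level[i], level[i + 1]))
            else:
                # An unpaired node is carried up to the next level unchanged
                next_level.append(level[i])
        level = next_level
    return level[0]


if __name__ == "__main__":
    log = [b"attestation-1", b"attestation-2", b"attestation-3"]
    published_root = merkle_root(log)
    tampered = [b"attestation-1", b"attestation-X", b"attestation-3"]
    print(merkle_root(tampered) == published_root)  # False: the change is detectable
```

A consumer who has kept an earlier root can therefore tell that the log was modified, even without trusting the log operator.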

Tekton Chains offers the advantage that neither the signature nor the attestation has to be produced by custom steps in the pipeline itself. Chains works regardless of whether a developer also integrates Cosign into a task to sign an image. The only requirement is that the tasks emit so-called Results, which tell Tekton unambiguously which artifacts should be signed. Results have two uses: a result can be passed through the pipeline into the parameters of another task or into a when expression, or its data can simply be output.

The data output by results serves the user as a source of information, for example the digest of a built container image or the commit SHA of a cloned repository. The Tekton Chains controller uses Results to determine which artifacts should be attested. It looks for task results whose names end in “*_IMAGE_URL” and “*_IMAGE_DIGEST”, where IMAGE_URL holds the URL of the artifact, IMAGE_DIGEST holds its digest, and the asterisk stands for any name.
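To illustrate the naming convention, the Python sketch below pairs results ending in IMAGE_URL and IMAGE_DIGEST that share the same prefix, which is roughly how we understand Chains’ type hinting. It is a simplified model, not the controller’s code; the result names and image reference in the example are hypothetical.

```python
from typing import Dict, List, Tuple

URL_SUFFIX = "IMAGE_URL"
DIGEST_SUFFIX = "IMAGE_DIGEST"


def find_image_artifacts(results: Dict[str, str]) -> List[Tuple[str, str]]:
    """Pair <prefix>IMAGE_URL with <prefix>IMAGE_DIGEST task results.

    Simplified model of Tekton Chains' type hinting; the real controller
    handles more cases than this.
    """
    artifacts = []
    for name in sorted(results):
        if not name.endswith(URL_SUFFIX):
            continue
        prefix = name[: -len(URL_SUFFIX)]  # e.g. "APP_" for "APP_IMAGE_URL"
        digest = results.get(prefix + DIGEST_SUFFIX)
        if digest is None:
            continue  # a URL without a matching digest is not attested
        # Task results are often written with a trailing newline
        artifacts.append((results[name].strip(), digest.strip()))
    return artifacts


if __name__ == "__main__":
    task_results = {
        "APP_IMAGE_URL": "registry.example.com/team/app\n",
        "APP_IMAGE_DIGEST": "sha256:0123abcd\n",
        "commit": "9f1c2e7",
    }
    print(find_image_artifacts(task_results))
    # [('registry.example.com/team/app', 'sha256:0123abcd')]
```

The commit result is ignored here: it is still useful output for the user, but it does not follow the naming convention, so it is not treated as an artifact to attest.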

Tekton Dashboard

The Tekton Dashboard is a web-based UI that makes managing Tekton resources easier. It can run in one of two modes: read-only or read/write.

As is typical for Kubernetes, the dashboard’s permissions are configured through a service account. This poses a problem, however, because the dashboard itself provides no authentication or authorization in either mode, so its permissions cannot be regulated per user through RBAC: RBAC applies only to the dashboard’s ServiceAccount, not to the individual users. In practice, anyone who can reach the dashboard has all the permissions granted to the Tekton Dashboard service account. This is a serious problem, especially if the dashboard is publicly accessible.

Kubernetes has no native user management because, unlike service accounts, users are not objects managed by the API server. For example, it is not possible to authenticate with a user name and password. However, Kubernetes supports several authentication methods based on client certificates, bearer tokens, or an authenticating proxy.

Two of these methods can be used to secure the Tekton Dashboard: OIDC tokens and Kubernetes user impersonation.

OpenID Connect (OIDC) is an extension of the OAuth 2.0 framework. OAuth 2.0 is an authorization framework that allows an application to perform actions or access data on behalf of a user without having to handle the user’s credentials. OIDC extends OAuth 2.0 with standardized user authentication and a standard way of providing user information.
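In practice, the difference shows up in the first request of the login flow: an OIDC client sends an ordinary OAuth 2.0 authorization request but includes openid in the scope, which lets it obtain an id_token in addition to the access token. The Python sketch below builds such a request URL for Google’s authorization endpoint; the client ID and redirect URI are hypothetical placeholders.

```python
import secrets
from typing import Optional, Tuple
from urllib.parse import urlencode

GOOGLE_AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"


def build_auth_request(client_id: str, redirect_uri: str,
                       state: Optional[str] = None) -> Tuple[str, str]:
    """Build an OIDC authorization-code request URL.

    Returns the URL and the state value, which the client must keep and
    compare with the state echoed back to the redirect URI (CSRF protection).
    """
    state = state or secrets.token_urlsafe(16)
    params = {
        "response_type": "code",          # authorization code flow
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": "openid email profile",  # "openid" turns OAuth 2.0 into OIDC
        "state": state,
    }
    return f"{GOOGLE_AUTH_ENDPOINT}?{urlencode(params)}", state


if __name__ == "__main__":
    url, state = build_auth_request(
        "example-client-id.apps.googleusercontent.com",  # hypothetical
        "https://dashboard.example.com/oauth2/callback",  # hypothetical
    )
    print(url)
```

Without openid in the scope, the same request is plain OAuth 2.0 and the provider returns no id_token.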

Kubernetes user impersonation allows one user to act as another user and thereby obtain all of that user’s permissions. Kubernetes implements this through impersonation headers. When such a request reaches the Kubernetes API server, the caller is first authenticated as itself; the API server then checks that the caller is allowed to impersonate, replaces the caller’s user information with that of the impersonated user, and authorizes the request against the impersonated identity.
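On the wire, impersonation is just a set of HTTP headers on an otherwise normal API request. The Python sketch below assembles them for a proxy that authenticates with its own bearer token; Impersonate-User and Impersonate-Group are the header names defined by Kubernetes, while the token, user, and group values are hypothetical. The headers are kept as a list of pairs because Impersonate-Group may appear several times.

```python
from typing import Iterable, List, Tuple


def impersonation_headers(proxy_token: str, user: str,
                          groups: Iterable[str] = ()) -> List[Tuple[str, str]]:
    """Headers for an API request that acts on behalf of `user`.

    The proxy authenticates as itself via the bearer token; the API server
    then checks that the proxy may impersonate and authorizes the request
    as `user` and `groups`.
    """
    headers = [
        ("Authorization", f"Bearer {proxy_token}"),
        ("Impersonate-User", user),
    ]
    # One Impersonate-Group header per group
    headers.extend(("Impersonate-Group", group) for group in groups)
    return headers


if __name__ == "__main__":
    for name, value in impersonation_headers("proxy-sa-token", "jane@example.com",
                                             ["tekton-developers"]):
        print(f"{name}: {value}")
```

From the command line, kubectl sends the same headers when given --as and --as-group, for example kubectl auth can-i list pipelineruns --as=jane@example.com --as-group=tekton-developers, which is a quick way to check what an impersonated user is allowed to do.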

Several tools can be used to achieve this. One of them is OpenUnison from Tremolo Security, which offers some advantages: it provides single sign-on (SSO) for graphical user interfaces as well as session-based access to Kubernetes via the command line. When OpenUnison or a similar technology is used, clients no longer communicate directly with the Kubernetes API server; instead, the traffic is routed through OpenUnison. For this, OpenUnison uses Jetstack’s OIDC reverse proxy (kube-oidc-proxy).

When a user wants to access the Tekton Dashboard, OpenUnison redirects them to the configured identity provider (IDP). After authenticating with the IDP, the user receives an id_token containing information about the authenticated user, such as name, email address, group memberships, and the token’s expiration time. The id_token is a JSON Web Token (JWT).
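A JWT consists of three base64url-encoded parts separated by dots: a header, a payload holding the claims, and a signature. The Python sketch below builds a token with hypothetical claims and reads the payload back. It deliberately skips signature verification, so it is only suitable for inspecting a token, never for trusting one.

```python
import base64
import json


def b64url_encode(data: bytes) -> str:
    # JWTs use unpadded base64url
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    # Restore the padding that the encoder stripped
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def decode_claims(token: str) -> dict:
    """Return the payload of a compact JWT WITHOUT verifying its signature."""
    _header, payload, _signature = token.split(".")
    return json.loads(b64url_decode(payload))


if __name__ == "__main__":
    # Hypothetical claims; real id_tokens are issued and signed by the IDP
    claims = {
        "iss": "https://accounts.google.com",
        "aud": "example-client-id",
        "email": "jane@example.com",
        "groups": ["tekton-developers"],
        "exp": 1735689600,
    }
    header = {"alg": "RS256", "typ": "JWT"}
    token = ".".join([
        b64url_encode(json.dumps(header).encode()),
        b64url_encode(json.dumps(claims).encode()),
        "unsigned",  # placeholder: no real signature
    ])
    print(decode_claims(token)["email"])  # jane@example.com
```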

The reverse proxy validates the id_token using the IDP’s public key. After successful validation, it adds impersonation headers to the request it forwards to the Kubernetes API server. The API server uses these headers to check whether the impersonated user has the permissions required for the request and, if so, executes it as the impersonated user. The reverse proxy then returns the response it received from the API server to the user.
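Besides the signature check against the IDP’s public key (which requires a JOSE/JWT library and the IDP’s published keys, and is not shown here), the proxy has to check the token’s claims before it sets any impersonation headers. The Python sketch below covers that second part: issuer, audience, and expiry. The issuer URL, client ID, and the choice of the email claim as the username are assumptions for illustration.

```python
import time
from typing import Optional


class TokenError(Exception):
    """Raised when an id_token must be rejected."""


def validate_claims(claims: dict, issuer: str, client_id: str,
                    now: Optional[float] = None, leeway: int = 60) -> str:
    """Check iss, aud and exp of claims whose signature was already verified.

    Returns the username to put into the Impersonate-User header.
    """
    now = time.time() if now is None else now
    if claims.get("iss") != issuer:
        raise TokenError("unexpected issuer")
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]
    if client_id not in audiences:
        raise TokenError("token was not issued for this client")
    # A missing exp claim is treated as already expired
    if now > claims.get("exp", 0) + leeway:
        raise TokenError("token has expired")
    username = claims.get("email") or claims.get("sub")
    if not username:
        raise TokenError("no usable username claim")
    return username


if __name__ == "__main__":
    claims = {"iss": "https://accounts.google.com", "aud": "example-client-id",
              "email": "jane@example.com", "exp": time.time() + 300}
    print(validate_claims(claims, "https://accounts.google.com", "example-client-id"))
```

Only if both the signature and these checks pass does the proxy forward the request with the returned name as the impersonated user; otherwise the user is sent back to the IDP.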

The following steps describe how to secure the dashboard with OAuth2:

Create a namespace:

```bash
kubectl create ns consumerrbac
```

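Install cert-manager:

```bash
# Add the Jetstack chart repository (needed for jetstack/cert-manager)
helm repo add jetstack https://charts.jetstack.io
helm repo update

helm install \
  cert-manager jetstack/cert-manager \
  --namespace consumerrbac \
  --version v1.11.0 \
  --set installCRDs=true
```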

To issue certificates, cert-manager needs an Issuer:

```yaml
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  name: letsencrypt-prod
  namespace: consumerrbac
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email:  # fill in the e-mail address for your Let's Encrypt account
    privateKeySecretRef:
      name: letsencrypt-prod
    solvers:
      - http01:
          ingress:
            class: nginx
```

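Now the NGINX Ingress Controller can be installed:

```bash
# Add the ingress-nginx chart repository (needed for ingress-nginx/ingress-nginx)
helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx
helm repo update

helm install nginx-ingress ingress-nginx/ingress-nginx --namespace consumerrbac
```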

Next, create the Ingress for the dashboard:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tekton-dashboard
  namespace: tekton-pipelines
  annotations:
    kubernetes.io/ingress.class: "nginx"
    # Every request is first checked against OAuth2 Proxy
    nginx.ingress.kubernetes.io/auth-url: http://oauth2-proxy.consumerrbac.svc.cluster.local/oauth2/auth
    # Unauthenticated users are sent to the sign-in page
    nginx.ingress.kubernetes.io/auth-signin: https://dashboard.35.198.151.194.nip.io/oauth2/sign_in?rd=https://$host$request_uri
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
spec:
  rules:
    - host: dashboard.35.198.151.194.nip.io
      http:
        paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: tekton-dashboard
                port:
                  number: 9097
```
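The two auth annotations make NGINX send a subrequest to OAuth2 Proxy (auth-url) before every dashboard request and act on its status code. The Python sketch below models that decision under our understanding of NGINX’s auth_request module and ingress-nginx: a 2xx response lets the request through to the dashboard service, 401 sends the browser to the auth-signin URL, 403 is passed on as 403, and any other status is treated as an error. It is a model for understanding the flow, not code that runs in the controller.

```python
from typing import Tuple, Union

SIGNIN_URL = "https://dashboard.35.198.151.194.nip.io/oauth2/sign_in"
BACKEND = "tekton-dashboard:9097"


def route(auth_status: int, host: str,
          request_uri: str) -> Tuple[str, Union[str, int]]:
    """Decide what happens to a dashboard request, given the auth subrequest status."""
    if 200 <= auth_status < 300:
        # OAuth2 Proxy accepted the session cookie
        return ("forward", BACKEND)
    if auth_status == 401:
        # No valid session: redirect to sign-in, remembering the original URL in rd
        return ("redirect", f"{SIGNIN_URL}?rd=https://{host}{request_uri}")
    if auth_status == 403:
        return ("deny", 403)
    # Any other status from the auth service is treated as an error
    return ("error", 500)


if __name__ == "__main__":
    print(route(401, "dashboard.35.198.151.194.nip.io", "/"))
```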

OAuth2 Proxy (we use Google as the provider and assume the OAuth application has been created there):

- In the Google Cloud dashboard, select APIs & Services

- On the left, select Credentials

- Click CREATE CREDENTIALS and select OAuth client ID

- For Application type, select Web application

- Give the app a name and enter the Authorized JavaScript origins and Authorized redirect URIs (the redirect URI must match the redirect-url in the values.yaml below)

- Click Create and note down the Client ID and Client Secret

- Create a values.yaml for the installation:

```yaml
config:
  clientID:      # Client ID from the Google Cloud console
  clientSecret:  # Client Secret from the Google Cloud console
extraArgs:
  provider: google
  whitelist-domain: .35.198.151.194.nip.io
  cookie-domain: .35.198.151.194.nip.io
  redirect-url: https://dashboard.35.198.151.194.nip.io/oauth2/callback
  cookie-secure: 'false'
  cookie-refresh: 1h
```
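OAuth2 Proxy also needs a cookie secret to encrypt its session cookie, which the values.yaml above does not set. The oauth2-proxy documentation generates one with a Python one-liner based on os.urandom; the sketch below wraps the same idea in a function. As far as we know, the Helm chart accepts the result as config.cookieSecret, but check that key against the chart version you use.

```python
import base64
import os


def generate_cookie_secret(num_bytes: int = 32) -> str:
    """Return a random, URL-safe base64-encoded cookie secret.

    As we understand it, OAuth2 Proxy expects the secret to yield 16, 24 or
    32 bytes, i.e. a valid AES key size.
    """
    if num_bytes not in (16, 24, 32):
        raise ValueError("cookie secret must be 16, 24 or 32 bytes")
    return base64.urlsafe_b64encode(os.urandom(num_bytes)).decode("ascii")


if __name__ == "__main__":
    print(generate_cookie_secret())
```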

Configuration of OpenUnison

Create a namespace:

```bash
kubectl create ns openunison
```

Add the Helm repository:

```bash
helm repo add tremolo https://nexus.tremolo.io/repository/helm/
helm repo update
```

Before OpenUnison can be deployed, an OAuth client must be configured in Google Cloud:

- Under APIs & Services, open Credentials and click CREATE CREDENTIALS

- Then select OAuth client ID

- Select Web application as the application type and give it a name

- Under Authorized JavaScript origins, enter https://k8sou.apps.x.x.x.x.nip.io

Now OpenUnison can be installed:

```bash
helm install openunison tremolo/openunison-operator --namespace openunison
```

Finally, OpenUnison has to be configured with the appropriate settings.

This concludes our series on Tekton. We hope you enjoyed it.